Summarizing Text Data Using NLP

Text summarization is a vital Natural Language Processing (NLP) task that is likely to have a significant impact on our lives. With the growth of the digital world and its impact on the publishing industry, reading an entire article, document, or book just to decide whether it is relevant is no longer a practical option, especially when time is scarce. Furthermore, as more articles are published and traditional print publications are digitized, it has become nearly impossible to keep track of the growing number of publishers available on the internet. This is where text summarization can help, by condensing texts so they take less time to read.

Text Summarization Techniques

- Abstractive Summarization

Abstractive summarization produces a summary of the input text in paraphrased form that takes all of the information into account. This differs from extractive summarization: rather than assembling a paragraph from the “best” original sentences, abstractive summarization generates a concise summary of the whole text in its own words.

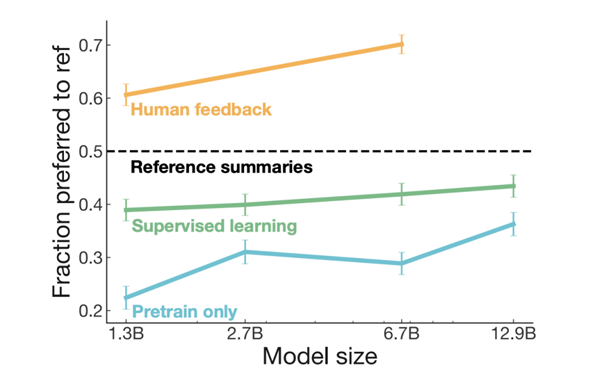

- Book Summarization with Human Feedback

OpenAI has released a new summarization pipeline that uses reinforcement learning and recursive task decomposition to summarize entire books. It uses a fine-tuned GPT-3 model trained on many human-written summaries. The model summarizes small sections of the book and then recursively summarizes the generated summaries into a final summary of the whole book. Because each model works on a smaller part of the larger task, humans can give better feedback on the summaries. In the end, the model produces summaries close to human quality for 5% of the books and slightly below human level 15% of the time.
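The recursive decomposition described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not OpenAI's pipeline: `summarize_chunk` is a hypothetical placeholder for a call to a fine-tuned model, `first_words` is a hypothetical stand-in for that call, and splitting the text into fixed-size word chunks is an assumption made for simplicity.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def recursive_summarize(text: str, summarize_chunk: Callable[[str], str],
                        max_words: int = 50) -> str:
    """Summarize chunks, join the partial summaries, and repeat on the result
    until it fits in one chunk; then summarize that final text once more."""
    while len(text.split()) > max_words:
        partial = [summarize_chunk(chunk) for chunk in chunk_words(text, max_words)]
        shorter = " ".join(partial)
        # Stop if the placeholder summarizer fails to shorten the text.
        if len(shorter.split()) >= len(text.split()):
            break
        text = shorter
    return summarize_chunk(text)


def first_words(chunk: str) -> str:
    """Hypothetical stand-in for a model call: keep the first five words."""
    return " ".join(chunk.split()[:5])


book = " ".join(f"w{i}" for i in range(200))
print(recursive_summarize(book, first_words))  # w0 w1 w2 w3 w4
```

With the 200-word input, the first pass turns four 50-word chunks into a 20-word text, which already fits in one chunk and is summarized a final time. A real pipeline would replace `first_words` with a model that actually paraphrases each section.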

- Extractive Summarization with BERTSUM

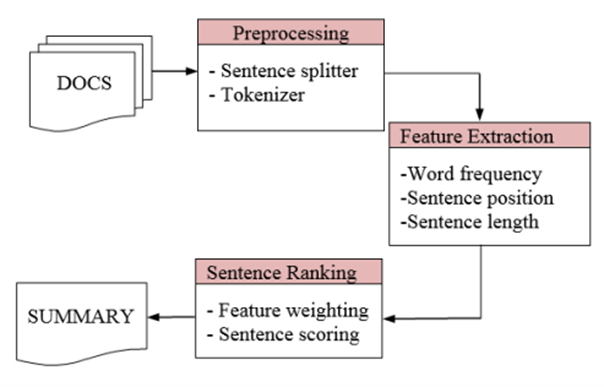

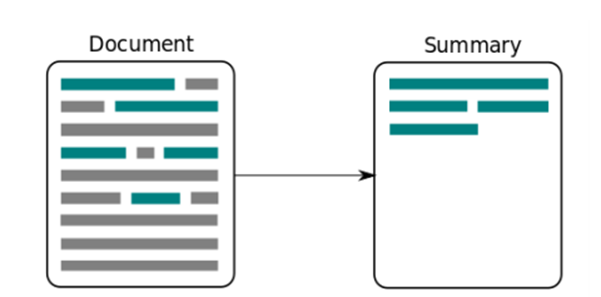

Extractive summarization works by extracting key phrases or sentences from a given text more or less verbatim. The processing pipeline resembles a binary classification model applied to every sentence: each sentence is either included in the summary or left out. BertSum is a fine-tuned BERT model focused on extractive summarization. BERT serves as the encoder in this architecture and is strong at handling long inputs and word-to-word relationships. Using BERT as a baseline and fine-tuning a domain-specific model on top of it is a popular pattern, because leveraging the baseline's pre-training can provide a large accuracy boost in the new domain while requiring fewer data points. The key change when going from the baseline to BertSum is the input format. Because we represent individual sentences rather than the entire text at once, we insert a [CLS] token at the start of every sentence instead of only at the start of the whole document. When the input passes through the encoder, the outputs at these [CLS] tokens represent our sentences.
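The input change can be illustrated with a minimal Python sketch, assuming whitespace tokenization in place of BERT's WordPiece tokenizer. It wraps each sentence in `[CLS] ... [SEP]` (BertSum also appends a `[SEP]` after every sentence) and records the position of each `[CLS]`, which is where a sentence-level classifier would read the encoder output. Both function names are hypothetical, and the scores passed to `select_sentences` are made-up placeholders for a trained classifier's output.

```python
from typing import List, Sequence, Tuple


def build_bertsum_input(sentences: Sequence[str]) -> Tuple[List[str], List[int]]:
    """Wrap every sentence as [CLS] ... [SEP] and record where each [CLS] sits."""
    tokens: List[str] = []
    cls_positions: List[int] = []
    for sentence in sentences:
        cls_positions.append(len(tokens))  # index of this sentence's [CLS]
        tokens.append("[CLS]")
        tokens.extend(sentence.split())    # whitespace split stands in for WordPiece
        tokens.append("[SEP]")
    return tokens, cls_positions


def select_sentences(sentences: Sequence[str], scores: Sequence[float],
                     k: int = 2) -> List[str]:
    """Keep the k highest-scoring sentences, restored to document order."""
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


sentences = ["BERT is an encoder.", "It was trained on large corpora.",
             "BertSum scores each sentence."]
tokens, cls_positions = build_bertsum_input(sentences)
print(cls_positions)  # [0, 6, 14]
# Placeholder scores; in BertSum they would come from a classifier applied
# to the encoder output at each [CLS] position.
print(select_sentences(sentences, [0.9, 0.2, 0.7]))
```

Treating selection as "score each sentence, keep the top k" is what makes the pipeline a per-sentence binary classification problem.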

Text Summarization Algorithms and Tools Available Online

1. LexRank Summarizer

LexRank is an unsupervised approach inspired by the same ideas behind Google’s PageRank algorithm. Its authors describe it as “based on the concept of eigenvector centrality in a graph representation of sentences”, using “a connectivity matrix based on intra-sentence cosine similarity.” In a nutshell, it builds a graph whose nodes are the sentences of a document and whose edges connect sentences that are similar to each other, then selects the most central sentences, that is, those similar to many other sentences in the document.
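A minimal LexRank sketch in Python follows. It is simplified under stated assumptions: sentences are compared with cosine similarity over plain word counts (the paper uses an idf-modified cosine), the graph is built by thresholding that similarity, and the scores come from a PageRank-style power iteration. The threshold of 0.1 and damping factor of 0.85 are illustrative choices.

```python
import math
import re
from collections import Counter
from typing import List


def bag_of_words(sentence: str) -> Counter:
    """Lowercased word counts; plain counts stand in for idf-weighted terms."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def lexrank(sentences: List[str], threshold: float = 0.1, damping: float = 0.85,
            iterations: int = 100) -> List[float]:
    bags = [bag_of_words(s) for s in sentences]
    n = len(sentences)
    # Connectivity matrix: 1 where two sentences (including a sentence with
    # itself) are more similar than the threshold, else 0.
    adj = [[1.0 if cosine(bags[i], bags[j]) > threshold else 0.0 for j in range(n)]
           for i in range(n)]
    degree = [sum(row) for row in adj]
    scores = [1.0 / n] * n
    # Power iteration: each sentence passes its score evenly to its neighbors.
    for _ in range(iterations):
        scores = [
            (1 - damping) / n
            + damping * sum(scores[j] * adj[j][i] / degree[j] for j in range(n) if degree[j])
            for i in range(n)
        ]
    return scores


docs = ["Cats chase mice.", "Dogs chase cats.", "Mice eat cheese."]
scores = lexrank(docs)
print(max(range(len(docs)), key=scores.__getitem__))  # 0
```

The first sentence ranks highest because it shares words with both of the others, which do not resemble each other; a summarizer would keep the top-scoring sentences in document order.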

2. Luhn Summarizer

One of the first text summarization algorithms was published in 1958 by Hans Peter Luhn, working at IBM Research. Luhn’s algorithm is a simple technique based on word frequency: it treats frequent, non-trivial words as significant and scores each sentence by how densely those words cluster, looking at the “window size” of non-significant words between words of high significance. Some variants also give higher weights to sentences near the beginning of a document.
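A simplified Python sketch of Luhn-style scoring is shown below. It assumes a tiny hand-written stop-word list and treats any non-stop word that occurs at least twice as significant; both are illustrative choices. Each sentence's score is the best value of (significant words in a cluster) squared divided by the cluster's length in words, where a cluster is a run of significant words separated by at most four non-significant words, the gap commonly attributed to Luhn. Positional weighting is not included.

```python
import re
from collections import Counter
from typing import List, Set

# Tiny illustrative stop-word list (an assumption; real systems use a fuller list).
STOP_WORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "it", "on", "for", "was"}


def significant_words(sentences: List[List[str]], min_count: int = 2) -> Set[str]:
    counts = Counter(w for s in sentences for w in s if w not in STOP_WORDS)
    return {w for w, c in counts.items() if c >= min_count}


def luhn_score(words: List[str], significant: Set[str], max_gap: int = 4) -> float:
    positions = [i for i, w in enumerate(words) if w in significant]
    if not positions:
        return 0.0
    best = 0.0
    start = prev = positions[0]
    count = 1
    for pos in positions[1:]:
        if pos - prev - 1 <= max_gap:  # still inside the current cluster
            count += 1
        else:                          # gap too wide: close the cluster
            best = max(best, count ** 2 / (prev - start + 1))
            start, count = pos, 1
        prev = pos
    return max(best, count ** 2 / (prev - start + 1))


def luhn_summarize(text: str, k: int = 1) -> List[str]:
    raw = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    tokenized = [re.findall(r"[a-z]+", s.lower()) for s in raw]
    significant = significant_words(tokenized)
    scores = [luhn_score(t, significant) for t in tokenized]
    top = sorted(range(len(raw)), key=lambda i: scores[i], reverse=True)[:k]
    return [raw[i] for i in sorted(top)]


text = ("Neural networks learn patterns. Neural networks need data to learn patterns. "
        "The weather was nice.")
print(luhn_summarize(text))  # ['Neural networks learn patterns.']
```

Both of the first two sentences contain the same four significant words, but the first packs them into a four-word cluster (score 16/4) while the second spreads them over seven words (score 16/7).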

3. TextRank Summarizer

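The TextRank algorithm is another graph-based model that scores each sentence according to the weights of its edges to the other sentences in the document, refining the scores iteratively as PageRank does. The edge weights measure how similar two sentences are, much as in LexRank, although the original TextRank paper measures similarity by word overlap rather than cosine similarity.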
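The TextRank scoring can be sketched in Python as follows. The similarity function follows the word-overlap measure from the TextRank paper (shared words divided by the sum of the logarithms of the two sentence lengths, here counting distinct shared words as a simplification), and the scores are refined with a weighted PageRank update using the usual damping factor of 0.85. Tokenization with a simple regular expression is an assumption.

```python
import math
import re
from typing import List


def overlap_similarity(a: List[str], b: List[str]) -> float:
    """Shared distinct words divided by log(len(a)) + log(len(b))."""
    if not a or not b:
        return 0.0
    denom = math.log(len(a)) + math.log(len(b))
    return len(set(a) & set(b)) / denom if denom > 0 else 0.0


def textrank(sentences: List[str], damping: float = 0.85,
             iterations: int = 50) -> List[float]:
    words = [re.findall(r"[a-z]+", s.lower()) for s in sentences]
    n = len(sentences)
    # Weighted, undirected sentence graph (no self-loops).
    w = [[overlap_similarity(words[i], words[j]) if i != j else 0.0 for j in range(n)]
         for i in range(n)]
    out_weight = [sum(row) for row in w]  # total edge weight attached to each sentence
    scores = [1.0] * n
    for _ in range(iterations):
        # Weighted PageRank: neighbor j contributes in proportion to w[j][i].
        scores = [
            (1 - damping)
            + damping * sum(w[j][i] / out_weight[j] * scores[j]
                            for j in range(n) if out_weight[j] > 0)
            for i in range(n)
        ]
    return scores


docs = ["Cats chase mice.", "Dogs chase cats.", "Mice eat cheese."]
scores = textrank(docs)
print(sorted(range(len(docs)), key=scores.__getitem__, reverse=True))  # [0, 1, 2]
```

Unlike the thresholded LexRank graph, every overlapping pair keeps its real-valued weight, so the second sentence (two shared words with the first) outranks the third (one shared word).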

Benefits of Text Summarization

The advantages of text summarization go beyond solving the obvious problems. Some other benefits of text summarization are as follows:

1. Saves Time

By generating summaries automatically, text summarization helps content editors save the time and effort they would otherwise spend writing article summaries by hand.

2. Instant Response

It reduces the effort a user needs to extract the relevant data. With automatic text summarization, a user can summarize an article in just a few seconds, reducing their reading time.

3. Increases Productivity Level

Text summarization lets the user scan the contents of a text quickly for accurate, specific information. By reducing the size of the text, the tool lightens the user’s workload and raises their productivity, since they can channel their energy into other important matters.

4. Ensures All Important Facts are Covered

A human reader can miss essential details, whereas automated software processes every sentence. What every reader needs is a good way to pick out what is useful to them from any piece of content, and automatic text summarization helps the reader capture the important information in a document with little effort.

Effective Daily Life Uses of Text Summarization

a. Financial Analysis

Investment bankers spend a lot of time and money on financial news because it influences their business decisions. Financial news is often hard to decode, which is why you could rely on an AI-based summarizer that simplifies it for you. The first step in such a project is to select a pre-trained model suited to financial news. Tokenize the text and store it. Next, load the pre-trained model to generate a summary and visualize the result.

b. Blog Summarizer

Why waste time reading irrelevant, lengthy blogs when you could read a summary and find out whether they are worth your time? This project idea is about building a blog-summarizing application that condenses the content of long blogs into a 100-word paragraph. To implement it, use Sumy, a Python library for automatic summarization of plain text and HTML pages. Use NLP techniques such as tokenization and stemming to preprocess the data before feeding it to Sumy.
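The preprocessing step can be sketched with Python’s standard library alone. This is not Sumy’s API: the sketch only pulls paragraph text out of an HTML page, splits it into sentences, tokenizes, removes a small assumed stop-word list, and applies `crude_stem`, a hypothetical suffix-stripper standing in for a real stemmer such as NLTK’s `PorterStemmer`. The helper `cap_words` (also hypothetical) trims a finished summary to the 100-word target.

```python
import re
from html.parser import HTMLParser
from typing import List

# Small illustrative stop-word list (an assumption, not Sumy's or NLTK's list).
STOP_WORDS = {"the", "a", "an", "and", "are", "of", "to", "in", "is", "it", "for", "on"}


class ParagraphExtractor(HTMLParser):
    """Collect text inside <p> tags, ignoring <script> and <style> content."""

    def __init__(self) -> None:
        super().__init__()
        self.paragraphs: List[str] = []
        self._in_paragraph = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "p":
            self._in_paragraph = True
            self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag == "p":
            self._in_paragraph = False

    def handle_data(self, data):
        if self._in_paragraph and self._skip_depth == 0:
            self.paragraphs[-1] += data


def crude_stem(word: str) -> str:
    """Hypothetical stand-in for a real stemmer: strip one common suffix."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(html: str) -> List[List[str]]:
    """HTML -> sentences -> lowercase tokens without stop words -> stems."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(p.strip() for p in parser.paragraphs if p.strip())
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text) if s]
    return [[crude_stem(w) for w in re.findall(r"[a-z]+", s.lower()) if w not in STOP_WORDS]
            for s in sentences]


def cap_words(summary: str, limit: int = 100) -> str:
    """Trim a finished summary to at most `limit` words."""
    return " ".join(summary.split()[:limit])


page = ("<html><head><style>p {color: red}</style></head><body>"
        "<p>Blogs are <b>long</b>.</p><script>var x = 1;</script>"
        "<p>Readers wanted summaries.</p></body></html>")
print(preprocess(page))  # [['blog', 'long'], ['reader', 'want', 'summari']]
```

Stems such as `summari` are not real words; that is expected, since stemming only needs to map related word forms to the same key.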

c. Summarizing Academic Papers

If you don’t like reading highly technical research papers, text summarization is for you. This project aims to build an application that summarizes the content of research papers. To implement it, use the NLTK library in Python. First, remove stop words from the text and create a similarity matrix to rank the sentences in the text. Then, pick the top-ranked sentences and use them to generate the summary.
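The stop-word removal, similarity matrix, and ranking steps can be sketched in Python. To keep the example self-contained, a short hand-written stop-word set stands in for NLTK’s `stopwords.words("english")` list, and each sentence is ranked by the sum of its cosine similarities to the other sentences, a simple centrality score; many tutorials instead run PageRank over the same matrix.

```python
import math
import re
from collections import Counter
from typing import List

# Small stand-in for nltk.corpus.stopwords.words("english").
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "are", "was",
              "we", "this", "that", "for", "on", "with"}


def tokenize(sentence: str) -> Counter:
    """Lowercase word counts with stop words removed."""
    return Counter(w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOP_WORDS)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def summarize(text: str, k: int = 2) -> str:
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    bags = [tokenize(s) for s in sentences]
    n = len(sentences)
    # Similarity matrix between every pair of distinct sentences.
    sim = [[cosine(bags[i], bags[j]) if i != j else 0.0 for j in range(n)] for i in range(n)]
    scores = [sum(row) for row in sim]  # rank = total similarity to the rest
    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:k]
    return " ".join(sentences[i] for i in sorted(top))


paper = ("We propose a new summarization model. The model summarizes research papers. "
         "Summarization of research papers saves time. The weather was sunny.")
print(summarize(paper, k=2))
# The model summarizes research papers. Summarization of research papers saves time.
```

The off-topic last sentence shares no content words with the others, so it scores zero and never enters the summary.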

Wrapping Up

In Natural Language Processing, or NLP, text summarization refers to the process of using deep learning and machine learning models to condense large bodies of text into their most important components. Text summarization can be applied to static, pre-existing texts, like research papers or news stories, or to audio or video streams, like a podcast or YouTube video, with the help of Speech-to-Text APIs.

- Umer Sufyan

- 19 December 2022