Most Popular MLOps Platforms and Tools

What is MLOps?

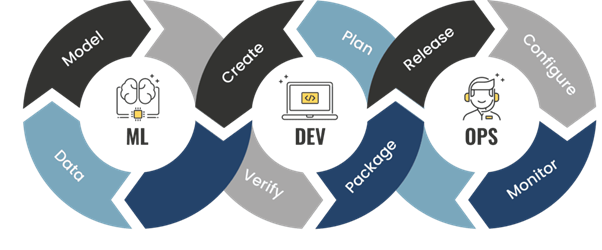

Machine learning operations, commonly called MLOps, is an approach for establishing policies, standards, and best practices for machine learning models. Instead of pouring extensive time and resources into machine learning development without a plan, MLOps works to ensure that the full lifecycle of ML development, from ideation to deployment, is carefully documented and managed for optimal results.

MLOps exists not only to improve the quality and security of ML models, but also to document best practices in a way that makes machine learning development more scalable for ML operators and developers. Because MLOps effectively applies DevOps techniques to a more niche area of technical development, some call it DevOps for machine learning. This is a helpful way to view MLOps because, like DevOps, it is all about knowledge sharing, collaboration, and best practices across teams and tools; MLOps gives developers, data scientists, and operations teams a guide for working together and building the most effective ML models as a result.

Uses of MLOps Tools and Platforms

MLOps tools can perform a variety of tasks for an ML team, but generally these tools and platforms can be divided into two categories: individual component management and platform management. While some MLOps tools specialize in one core area, such as data or metadata management, other tools take a more holistic approach and offer an MLOps platform to manage several pieces of the ML lifecycle. Whether you're looking at a specialized or a more general-purpose platform for MLOps, look for tools that help your team manage these areas of ML development:

- Data management

- Deployment and ongoing maintenance and improvement of ML models

- Modeling and Design

- Project and Workspace Management

- End-to-end lifecycle management

Best MLOps Tools & Platforms

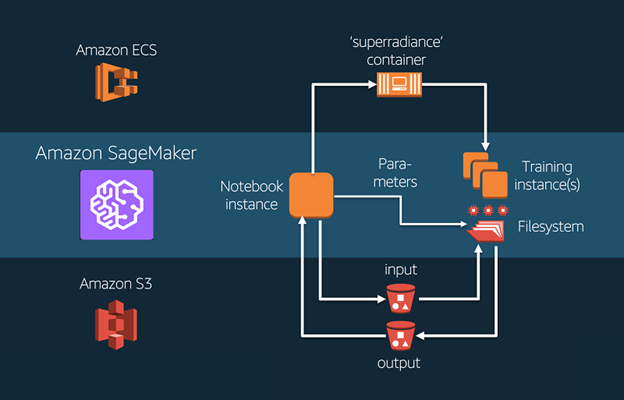

Amazon SageMaker

Amazon SageMaker allows developers and data scientists to build, train, and deploy machine learning models at any scale quickly and easily. It removes many of the barriers that usually slow down developers who want to apply machine learning. SageMaker has several notable use cases. One is building machine learning models that help an organization's call center staff analyze frequently raised customer problems and their widely accepted solutions. Such models help lower operating costs by automating and optimizing processes with minimal manual intervention. Another use case is product development that requires decision making based on image processing.

Features:

- Automated bias, model drift, and concept drift detection

- Automated data loading, data transformation, model building, training, and tuning via Amazon SageMaker Pipelines

- CI/CD through source and version control, automatic testing, and complete end-to-end automation

- Workflow logging for training data, platform configurations, model parameters, and learning gradients

- Security capabilities for policy management and enforcement, infrastructure security, data protection, authorization, authentication, and tracking.
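
To make the drift detection item above more concrete, here is a minimal sketch of one way to quantify drift in a single feature: the population stability index (PSI), computed with only the Python standard library. This is a technique chosen for illustration and is not a description of how SageMaker detects drift internally; the function name, the bin count, and the toy `training` and `production` samples are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for constant data

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm finite for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bucket_shares(reference)
    cur_shares = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


# Hypothetical data: the production feature has the same shape but is shifted.
training = [x / 100 for x in range(1000)]
production = [x / 100 + 3.0 for x in range(1000)]

print(population_stability_index(training, training))    # identical samples
print(population_stability_index(training, production))  # shifted sample
```

A monitoring job could compute a score like this on a schedule and raise an alert when it crosses a threshold the team has agreed on.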

Pros

- Machine learning at scale using large volumes of training data.

- Kubernetes integration for containerized deployments.

- Accelerated data processing for faster outputs and insights.

- API endpoints can be created for use by technical users.

Cons

- The UI could be simplified for business analysts and non-technical users.

- When pulling large volumes of data from legacy systems, the platform lags a bit.

- Since ML is an emerging field that most businesses are likely to adopt in the future, the pipeline integrations could be better optimized.

MLflow

MLflow is an open-source machine learning lifecycle management platform from Databricks. There is also a hosted MLflow service. MLflow has three core components: Tracking, Projects, and Models.

MLflow Projects provide a format for packaging data science code in a reusable and reproducible way, based primarily on conventions. In addition, the Projects component includes an API and command-line tools for running projects, making it possible to chain projects together into workflows.

MLflow Models use a standard format for packaging machine learning models that can be used in a variety of downstream tools, for example real-time serving through a REST API or batch inference on Apache Spark. The format defines a convention that lets you save a model in different “flavors” that can be understood by different downstream tools and platforms.

Features

- MLflow Projects to package data science code in a reproducible format.

- MLflow Models to deploy models in diverse serving environments.

- MLflow Tracking to record and query experiments.

- Model Registry to store, annotate, discover, and manage models in a single location.

- Compatible with many ML libraries, codebases, and languages.
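
The idea behind experiment tracking, recording the parameters and metrics of every run so they can be queried later, can be sketched in a few lines. The example below deliberately uses only the Python standard library and is not MLflow's API; `RunTracker`, `log_run`, `best_run`, and the file name `runs.jsonl` are hypothetical names invented for this illustration.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


class RunTracker:
    """Hypothetical, minimal stand-in for an experiment tracking store."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def log_run(
        self, name: str, params: Dict[str, float], metrics: Dict[str, float]
    ) -> None:
        # Append one JSON record per run so earlier runs are never overwritten.
        record = {"name": name, "params": params, "metrics": metrics}
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")

    def runs(self) -> List[dict]:
        # Read back every recorded run, skipping blank lines.
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def best_run(self, metric: str) -> dict:
        # "Querying" here simply means picking the run with the highest metric.
        return max(self.runs(), key=lambda r: r["metrics"][metric])


# Made-up runs, stored in a temporary directory.
tracker = RunTracker(Path(tempfile.mkdtemp()) / "runs.jsonl")
tracker.log_run("baseline", {"learning_rate": 0.1}, {"accuracy": 0.81})
tracker.log_run("lower-lr", {"learning_rate": 0.01}, {"accuracy": 0.87})

best = tracker.best_run("accuracy")
print(best["name"], best["params"])
```

A real tracking tool adds much more on top of this, such as a UI, artifact storage, and concurrent access, but the core record-then-query loop is the same.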

Pros

- Alongside metrics logging, you can save your trained model, conda environment, and any other file you consider important.

- Through the MLflow UI, you can access all previous versions of the deployed model.

- With MLflow Models, you can package your trained model for deployment on a wide range of platforms.

Cons

- Hard to deploy ML. Moving a model to production can be challenging due to the plethora of deployment tools and environments it needs to run in (e.g., REST serving, batch inference, or mobile apps).

- Unlike traditional software development, where teams select one tool for each phase, in ML you usually want to try every available tool (e.g., algorithm) to see whether it improves results. ML developers thus need to use and productionize dozens of libraries.

Google Cloud AI Platform

Organizations use Google Cloud AI company-wide for computer vision workloads such as image classification and related use cases. Google Cloud AI Platform is an end-to-end, fully managed platform for machine learning and data science. It has features that help you manage services faster and more seamlessly. Its ML workflow makes things easy for developers, data scientists, and data engineers. The platform has many capabilities that support machine learning lifecycle management.

Features

- Google Cloud AI Platform includes several resources that help you carry out your machine learning experiments efficiently.

- Cloud Storage and BigQuery let you prepare and store your datasets. You can then use a built-in feature to label your data.

- You can complete tasks without writing any code by using the AutoML feature, which has an easy-to-use UI. You can also use Google Colab to run your notebooks free of charge.

- Deployment can be done with AutoML features, and it can perform real-time actions on your model.

Pros

- Google Cloud AI is straightforward to set up without needing a lot of customization and configuration.

- It integrates well with Google BigQuery and Google Pub/Sub, making it easy to have a ready-to-use pipeline from data ingestion to analysis.

- Google Cloud AI has out-of-the-box CV algorithms and video processing modules/APIs that make image and video processing applications and use cases easy to implement.

Cons

- Customizing existing modules and libraries is more difficult, and it takes time and experience to learn.

- Google Cloud AI could provide better support for Python and other programming languages.

Seldon

Seldon Core is an open-source platform for rapidly deploying machine learning models on Kubernetes. Seldon Deploy is an enterprise subscription service that lets you work in any language or framework, in the cloud or on-prem, to deploy models at scale. Seldon Alibi is an open-source Python library enabling black-box machine learning model inspection and interpretation.

Features

- Seldon is built on Kubernetes and is available on any cloud and on-premises.

- Seldon supports OpenTracing to trace API calls to Seldon Core. By default, Seldon supports Jaeger for distributed tracing to provide insights on latency and microservice-hop performance.

- Seldon is framework agnostic and supports top ML libraries, languages, and toolkits. It is tested on Azure AKS, AWS EKS, Google GKE, DigitalOcean, OpenShift, and others.

- Seldon offers customizable and advanced metrics with integration to Grafana and Prometheus.

- Seldon provides full auditability, with model input-output requests backed by Elasticsearch and logging integration. Metadata provenance allows tracing each model back to its corresponding training system, metrics, and data.

- Seldon supports advanced deployments with runtime inference graphs powered by ensembles, transformers, routers, and predictors.
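
To illustrate what an inference graph built from transformers, routers, ensembles, and predictors means in practice, here is a conceptual sketch in plain Python. It does not use Seldon's API or its deployment format; every function name and the toy models are assumptions made up for this example, and in a real deployment each step would typically run as its own containerized component.

```python
from statistics import mean
from typing import Callable, List, Sequence

Features = List[float]
Predictor = Callable[[Features], float]


def scale_inputs(features: Features) -> Features:
    """Transformer step: rescale raw inputs before they reach any model."""
    return [x / 10.0 for x in features]


def model_a(features: Features) -> float:
    """Toy predictor standing in for a deployed model."""
    return sum(features)


def model_b(features: Features) -> float:
    """Second toy predictor, used only in the ensemble."""
    return max(features)


def ensemble(predictors: Sequence[Predictor]) -> Predictor:
    """Combiner step: average the outputs of several predictors."""
    return lambda features: mean(p(features) for p in predictors)


def router(features: Features) -> Predictor:
    """Router step: short inputs go to one model, longer ones to the ensemble."""
    return model_a if len(features) < 3 else ensemble([model_a, model_b])


def predict(raw: Features) -> float:
    """Run one request through the graph: transform, route, then predict."""
    features = scale_inputs(raw)
    return router(features)(features)


print(predict([10.0, 20.0]))        # routed to model_a
print(predict([10.0, 20.0, 30.0]))  # routed to the ensemble
```

The value of expressing a deployment as a graph is that each node can be swapped, scaled, or monitored independently of the others.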

Pros:

- Working on an interesting, cutting-edge product covering both machine learning and cloud-native technologies.

- Great culture that encourages innovation, with the ambition to solve complex challenges in the machine learning operations space at scale.

- Really inclusive culture that is open to suggestions.

Cons:

- For some, the breadth of the role can be a challenge, as you are expected to contribute more broadly than at a larger company.

- Some processes are not strictly defined yet because the team and product are growing fast, which can be frustrating at times.

Valohai

Valohai offers its customers a variety of pipelines, workflows, and other automated deployment features that simplify lifecycle management for multiple ML models at once. Many customers also choose Valohai because its open API lets the tool integrate flexibly with external hardware and tools, including existing CI/CD pipelines.

Features

- Hyperparameter optimization

- Machine orchestration

- Machine learning pipelines

- Version control for machine learning

- Model deployment

- Hosted notebooks

Pros:

- The interface is simple and easy to use; defining jobs in YAML files is easy, and creating jobs is even easier with the web interface.

- Valohai makes it very easy to build automated deep learning pipelines and automatically takes care of version control for experiments.

- Valohai helps us streamline our machine learning model development.

Cons:

- Error messages are sometimes confusing, and there are minor interface gaps.

- A standalone app with desktop notifications would be welcome.

WandB

Weights & Biases (WandB) is a Python package that lets us monitor training in real time. It integrates easily with popular deep learning frameworks such as PyTorch, TensorFlow, and Keras. WandB is an experiment tracking tool for machine learning. It helps anyone doing machine learning keep track of experiments and share results with colleagues and their future selves.

Features

- Explain how your model works, show graphs of how model versions improved, discuss bugs, and show progress toward milestones.

- Track, compare, and visualize ML experiments with five lines of code. Free for academic and open-source projects.

- Add W&B’s lightweight integration to your existing ML code and quickly get live metrics, terminal logs, and system data streamed to the centralized dashboard.

Pros:

- WandB is open source and free for academic research.

- If experiment tracking is the only functionality needed and the budget is high, WandB is the best choice.

- Collaborate in real time.

Cons:

- WandB can sync distinct local runs to the same run in the web app.

- WandB offers experiment tracking only, so if model deployment or other interactive functionality, such as notebook hosting, is required, WandB might not be the best choice.

- Umer Sufyan

- 30 May 2022

How to Make the Best Choice

Most ML platforms and tools offer a robust system with GUI-based tools to improve the ML workflow. Different tools have different designs and workflows. Some platforms are easy for beginners. Azure, for example, offers a drag-and-drop option that simplifies tasks like accessing, cleaning, scoring, and testing your machine learning data.

A few platforms and tools are quite complex and require you to manage your ML workflow with Python code and notebooks. There are pros and cons to every platform. It is a personal choice because, no matter which platform you choose, your model accuracy will not vary much. Workflows differ, but you can import your own algorithm. Pricing is an important consideration here, as most platforms offer a pay-as-you-go option that lets you pay only for the features you use.

If you’re a solo data scientist or ML engineer, or have a small team, and want to try out a platform before deploying your models, you can go with a platform or tool that offers a free trial or free credits. This will help you understand how things work and whether you and your team are comfortable using the platform.

References

- https://www.phdata.io/blog/mlops-vs-devops-whats-the-difference/

- https://wandb.ai/site

- https://medium.com/seldon-open-source-machine-learning/introducing-seldon-deploy-c390d11af20c