Data Drift Vs Concept Drift

- Umer Sufyan

- 17 March 2022

Nothing lasts forever, and youth is not eternal. This echoes the second law of thermodynamics, which states that, over time, things tend toward disorder and increasing entropy. In machine learning, the equivalent is a model whose predictive power worsens over time. Models that operate in a dynamic environment can see their performance change as that environment changes. It is therefore important to know what data your model is trained on and when that data may change; for example, if you are training your model on weather data collected by the National Weather Service, the patterns in that data can shift from year to year.

If your model is going to be deployed in a continually changing environment, it should be retrained when new data becomes available. Otherwise, its performance will degrade over time because of model drift.

Model Drift

Models trained with machine learning methods can produce predictions whose quality changes over time. This model drift is caused by changes in the data itself or in the relationships between input and output variables. It usually occurs when a model trained on one set of data is applied to data that differs from it. With model drift, the model may continue to predict outcomes accurately for some time, but its predictions will become less accurate as the differences between the training data and the data it sees in production grow. Data drift and concept drift are two of the primary types of model drift.

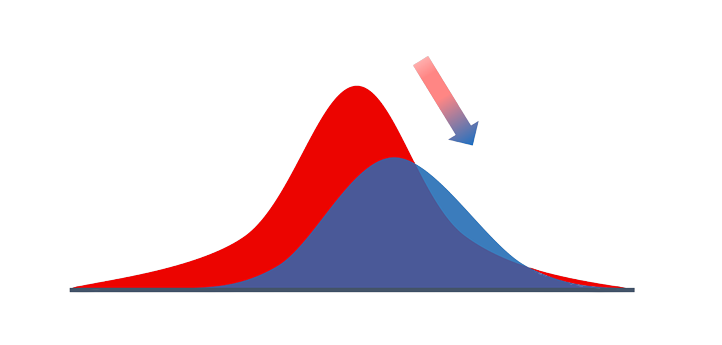

Data Drift

Data drift is a problem that occurs when a model trained on one dataset is used to make predictions on data whose characteristics differ from those of the training data, typically because the underlying data has changed since training. Examples include differences in how two sets of data are labeled, or changes in how a dataset is constructed (for example, the training dataset lists the labels first and then the features, but the new dataset puts the labels last). Other factors that can cause data drift include changes in human behavior and differences in scale between datasets.

As more businesses use AI and machine learning (ML) in their everyday operations, it's important to understand the risks these systems carry, and data drift is one of the biggest. It can degrade an ML model's performance, with serious consequences for business-critical applications.

Let's look at another example of data drift. Say you're building a predictive model that estimates how likely you are to win or lose at poker, based on your current hand and the cards on the table. You train the model on previous games and it performs well, but as you play, you realize that something has changed: maybe more cards with hearts are turning up than before, or fewer with diamonds. The model is no longer accurate, and it can't adapt to the new conditions of the game on its own!
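One way to put a number on a shift like the one in the poker example is the population stability index (PSI), which compares how observations are spread across categories (or bins) in a reference sample and in a newer sample. The Python sketch below is a minimal illustration, assuming categorical data; the suit counts are invented for the example, and the 0.1 / 0.25 cut-offs in the final comment are a common rule of thumb rather than a standard.

```python
import math
from collections import Counter


def psi(expected, actual, eps=1e-6):
    """Population stability index between two categorical samples.

    PSI = sum_i (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the
    shares of category i in the reference and the new sample.
    """
    exp_counts, act_counts = Counter(expected), Counter(actual)
    total = 0.0
    for cat in set(expected) | set(actual):
        # eps keeps the logarithm finite when a category is missing from one sample
        e = max(exp_counts[cat] / len(expected), eps)
        a = max(act_counts[cat] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical suit counts: games the model was trained on vs. games played now
training_suits = ["hearts"] * 25 + ["diamonds"] * 25 + ["clubs"] * 25 + ["spades"] * 25
current_suits = ["hearts"] * 40 + ["diamonds"] * 10 + ["clubs"] * 25 + ["spades"] * 25

score = psi(training_suits, current_suits)
print(f"PSI = {score:.3f}")
# Rule of thumb (assumption): < 0.1 stable, 0.1-0.25 moderate shift, > 0.25 major shift
```

For numeric features, the same function can be applied after bucketing values into fixed bins defined on the training data.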

Types of Data Drift

Data drift can happen for many reasons, and it's sometimes hard to pinpoint exactly why. However, some general causes are worth considering when trying to mitigate its effects. But first, let's look at the types of data drift.

Shift in the Target Variables – Prior Probability Shift

This is when the distribution of the target variable changes over time. For example, if you predict whether a borrower will default on a loan, the share of borrowers who default may rise during an economic downturn. The probability of the target event has changed, even though the question the model answers has not.

Shift in the Independent Variables – Covariate Shift

This is when the distribution of the independent variables changes over time. For example, you could be predicting whether a person will buy a product based on their income and gender. Over time, however, the average income of your customers changes, or there is an influx of customers of one gender. Here, the distribution of the independent variables has changed.
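Covariate shift in a single numeric feature such as income can be checked with a two-sample Kolmogorov-Smirnov (KS) test, which measures the largest gap between the two samples' empirical cumulative distribution functions. The sketch below computes the statistic by hand and compares it with the large-sample critical value; the income figures are simulated, and in practice `scipy.stats.ks_2samp` computes the statistic and a p-value for you.

```python
import math
import random


def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the empirical CDFs of two samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Step past every copy of x in both samples so ties are handled correctly
        while i < len(a) and a[i] == x:
            i += 1
        while j < len(b) and b[j] == x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def covariate_shift_detected(reference, current, alpha=0.05):
    """Asymptotic two-sample KS test: flag drift if D exceeds the critical value."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)  # about 1.358 for alpha = 0.05
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical


rng = random.Random(0)
# Hypothetical customer incomes at training time vs. today (average income has risen)
train_income = [rng.gauss(50_000, 10_000) for _ in range(500)]
live_income = [rng.gauss(60_000, 10_000) for _ in range(500)]

print("KS statistic:", round(ks_statistic(train_income, live_income), 3))
print("Covariate shift detected:", covariate_shift_detected(train_income, live_income))
```

A similar check for prior probability shift would compare the share of each label in the two periods, for example with the PSI function sketched earlier.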

Concept Drift

Concept drift is when the relationship between the independent and dependent variables changes over time, so that the same inputs now map to different outputs. It is an issue in ongoing learning situations where a machine learning (ML) model has been trained on data from a process that isn't stable. For example, if you are training an ML model to forecast the stock market, you have to expect the relationships it learns to change over time, since new information is continually added to its dataset.
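A small simulation shows what concept drift does to a model that is not retrained. In this hypothetical setup, the inputs keep the same distribution throughout, but the rule linking them to labels changes, so the drop in accuracy cannot be seen by watching the inputs alone. The sketch learns a single cut point on `x`, then scores it on data from the original concept and on data where the true cut point has moved from 5 to 7.

```python
import random

rng = random.Random(42)


def make_batch(n, true_cut):
    """Hypothetical data: x is uniform on [0, 10] and the label is 1 when x > true_cut.

    Changing true_cut changes P(y | x) while leaving the distribution of x untouched.
    """
    return [(x, int(x > true_cut)) for x in (rng.uniform(0, 10) for _ in range(n))]


def accuracy(cut, batch):
    """Share of examples where the rule 'predict 1 if x > cut' matches the label."""
    return sum(int(x > cut) == y for x, y in batch) / len(batch)


def fit_threshold(batch):
    """Pick the cut point on a 0.1-spaced grid with the highest training accuracy."""
    candidates = [c / 10 for c in range(101)]
    return max(candidates, key=lambda c: accuracy(c, batch))


train = make_batch(1000, true_cut=5.0)
cut = fit_threshold(train)

before = make_batch(1000, true_cut=5.0)  # same concept as during training
after = make_batch(1000, true_cut=7.0)   # concept has drifted; inputs have not
print(f"learned cut point: {cut}")
print(f"accuracy before drift: {accuracy(cut, before):.2f}")
print(f"accuracy after drift:  {accuracy(cut, after):.2f}")
```

Because `x` has the same distribution in all three batches, a distribution check such as the KS test would not flag anything here; only monitoring accuracy against true labels reveals the problem.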

Examples of Concept Drift

Concept drift occurs when the relationship a predictive model has learned stops holding as new data arrives, and it shows up in several kinds of applications.

Forecasting and Determining

In many cases, data scientists try to build highly accurate models that forecast future trends in an industry or economic sector. If concept drift is not accounted for, these predictions become less and less accurate over time as business conditions change or other factors come into play. For example, a company may have been able to predict future sales from historical data, but if it does not take into account changes such as new products entering the marketplace, its predictions will stop being correct because of concept drift.

Personalization

Concept drift can cause problems for companies that rely heavily on user analysis and other information about their customers to provide personalized experiences online or through mobile apps. If concept drift affects their personalization algorithms, customers may receive recommendations and experiences that no longer match their preferences.

Strategies to Detect Drift

Although it's not always possible to avoid drift completely, several techniques can help detect drift as it occurs and let systems adapt accordingly:

- Using unsupervised learning methods, such as K-Means clustering or Gaussian mixture models fitted with expectation maximization.

- Monitoring the distributions of input variables for unexpected changes over time (e.g., a feature that was normally distributed suddenly becoming bimodal).

- Retraining models periodically so that they don't become stale.

- Using robust statistics (e.g., the median instead of the mean) when processing data streams.

- Using ensemble methods such as bagging instead of single classifiers or regressors, or real-time classifiers with decaying weights, to build a system that evolves with the data.

In general, drift is detected by monitoring a model's performance and comparing it with a reference point. When the performance changes significantly, drift is flagged and an alert is sent so that someone can take action.
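The idea of comparing a model's performance with a reference point can be sketched as a rolling-accuracy monitor. This is a minimal illustration, assuming that the true label eventually arrives for each prediction; the class name, the 100-prediction window, and the 5-point tolerance are hypothetical choices, not values from any particular tool.

```python
from collections import deque


class PerformanceMonitor:
    """Flags possible drift when rolling accuracy falls well below a reference.

    reference_accuracy: accuracy measured on held-out data at deployment time.
    tolerance: how far (in absolute accuracy) the rolling value may drop
    before an alert is raised. Both defaults here are hypothetical.
    """

    def __init__(self, reference_accuracy, window=100, tolerance=0.05):
        self.reference = reference_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def update(self, prediction, actual):
        """Record one labeled prediction; return True if an alert should fire."""
        self.outcomes.append(int(prediction == actual))
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait until the window is full
        rolling = sum(self.outcomes) / len(self.outcomes)
        return rolling < self.reference - self.tolerance


monitor = PerformanceMonitor(reference_accuracy=0.95)
alerts = []
for i in range(600):
    # Hypothetical stream: 95% correct for the first 300 predictions, then 70%
    correct = (i % 20 != 0) if i < 300 else (i % 10 < 7)
    if monitor.update(prediction=1, actual=1 if correct else 0):
        alerts.append(i)
print("first alert at prediction", alerts[0] if alerts else None)
```

The window size trades speed for stability: a shorter window reacts faster to a real drop but raises more false alarms on noisy data.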

Beyond these, one of the most popular methods is adaptive windowing (ADWIN), an algorithm that detects drift in a stream of data. ADWIN keeps a window of recent values that grows automatically while the data appear stable and shrinks, by discarding older values, when the averages of its older and newer parts differ significantly.
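The grow-and-shrink idea can be illustrated with a simplified version of the basic algorithm (called ADWIN0 by its authors, Bifet and Gavaldà). The sketch assumes values in [0, 1], such as a 0/1 error indicator, and uses the Hoeffding-style cut threshold eps_cut = sqrt(ln(4/δ') / (2m)), where m = 1/(1/n0 + 1/n1) and δ' = δ/n; check the original paper before relying on these details. The real ADWIN stores the window in exponential buckets so updates stay cheap, whereas this version re-scans the whole window and is only suitable for small illustrations.

```python
import math


class SimpleADWIN:
    """Simplified ADWIN0-style change detector (an illustrative sketch).

    After each new value, every split of the window into an older part (W0)
    and a newer part (W1) is checked. While some split shows means that differ
    by more than eps_cut, the oldest value is dropped, shrinking the window.
    """

    def __init__(self, delta=0.002):
        self.delta = delta  # confidence parameter
        self.window = []

    def _eps_cut(self, n0, n1):
        n = n0 + n1
        m = 1 / (1 / n0 + 1 / n1)
        delta_prime = self.delta / n
        return math.sqrt(math.log(4 / delta_prime) / (2 * m))

    def _has_cut(self):
        """True if some split's sub-window means differ by more than eps_cut."""
        n = len(self.window)
        total = sum(self.window)
        head_sum = 0.0
        for n0 in range(1, n):
            head_sum += self.window[n0 - 1]
            n1 = n - n0
            mean0, mean1 = head_sum / n0, (total - head_sum) / n1
            if abs(mean0 - mean1) > self._eps_cut(n0, n1):
                return True
        return False

    def update(self, value):
        """Add a value; return True if the window had to shrink (change detected)."""
        self.window.append(value)
        drift = False
        while len(self.window) >= 2 and self._has_cut():
            self.window.pop(0)  # drop the oldest value and re-check
            drift = True
        return drift


detector = SimpleADWIN(delta=0.002)
# Idealized error stream: the model is always right for 300 steps, then always wrong
stream = [0.0] * 300 + [1.0] * 100
detections = [i for i, x in enumerate(stream) if detector.update(x)]
print("first change detected at index", detections[0])
```

With this idealized stream, the first detection arrives a few values after index 300, once enough new values have accumulated to make the difference in means statistically convincing.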

Detect Drift Type

How to Overcome Drift

To avoid problems with data and concept drift, you need to train your models regularly using fresh data. You can do this in two ways:

- Scheduled retraining: Set up a schedule for periodically retraining models at regular intervals (e.g., once a month).

- Automatic retraining: Implement a system that detects drift and retrains the model accordingly (e.g., when incoming data differs from expected values by more than 15%).
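The two retraining triggers above can be combined in a few lines. In this sketch, `should_retrain` and `relative_change` are hypothetical helpers; the 30-day interval stands in for "once a month", and the drift score is assumed to be a relative change in some monitored value, so that 0.15 corresponds to the 15% example.

```python
from datetime import datetime, timedelta


def relative_change(expected_mean, observed_mean):
    """Hypothetical drift score: relative change of a monitored mean."""
    return abs(observed_mean - expected_mean) / abs(expected_mean)


def should_retrain(last_trained, now, drift_score,
                   interval=timedelta(days=30), drift_threshold=0.15):
    """Return why the model should be retrained, or None if it can stay as-is.

    Combines scheduled retraining (a fixed interval) with automatic
    retraining (a drift score above a threshold).
    """
    if now - last_trained >= interval:
        return "scheduled"
    if drift_score > drift_threshold:
        return "drift"
    return None


last = datetime(2022, 3, 1)
score = relative_change(expected_mean=100.0, observed_mean=120.0)  # 0.2
print(should_retrain(last, datetime(2022, 3, 10), score))  # drift
print(should_retrain(last, datetime(2022, 4, 5), 0.0))     # scheduled
print(should_retrain(last, datetime(2022, 3, 10), 0.05))   # None
```

Checking the schedule first means a model is refreshed at least monthly even if the drift score never crosses the threshold.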

If retraining is not an option, Zliobaite et al. (2015) suggest using adaptive ensemble methods based on support vector machines or Gaussian mixture models.

Monitor the Model's Performance

Static Models

Some basic ML models are static, which means they are not retrained on new data. For instance, a classification tree is built once on a training set and then used to make predictions, with no further training. For static models, it is simple to check performance continuously by scoring them on new data and retesting them as needed.

Updated Models

Other ML models are updated with new examples after their initial training. This is known as online learning or incremental learning, and it continues until a stipulated number of training steps is reached or until fresh training data no longer changes the model's performance. For these kinds of models, testing requires keeping two kinds of data apart: training data and test data.
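For an incrementally updated model, a common way to keep training and test data apart is prequential (test-then-train) evaluation: each new example is first used to score the model and only then used to update it. The sketch below is a hypothetical illustration with a one-feature linear model trained by stochastic gradient descent on a simulated stream whose concept changes halfway through; the learning rate and the data are assumptions chosen for the example.

```python
import random


class OnlineLinearModel:
    """y ~ w * x + b, updated one example at a time with plain SGD."""

    def __init__(self, lr=0.5):
        self.w, self.b, self.lr = 0.0, 0.0, lr

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        # Gradient step on 0.5 * (prediction - y) ** 2 with respect to w and b
        err = self.predict(x) - y
        self.w -= self.lr * err * x
        self.b -= self.lr * err


def mean(values):
    return sum(values) / len(values)


rng = random.Random(1)
model = OnlineLinearModel()
errors = []
for t in range(1000):
    x = rng.uniform(-1, 1)
    # Hypothetical stream whose concept changes at t = 500
    y = 2 * x + 1 if t < 500 else -x + 3
    errors.append((model.predict(x) - y) ** 2)  # test on the new example first...
    model.update(x, y)                          # ...then train on it

print(f"MSE just before the change: {mean(errors[400:500]):.4f}")
print(f"MSE just after the change:  {mean(errors[500:510]):.4f}")
print(f"MSE at the end:             {mean(errors[900:1000]):.4f}")
```

The prequential error should be near zero before the change, jump right after it, and fall back as the model adapts, which is the signal a performance monitor would watch for.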

Recap

Over time, all kinds of things change. Mangoes go bad, the temperature gets warmer, and machine learning models lose their predictive power, a phenomenon known as model drift. In this article, we explored model drift and its two most common causes: concept drift and data drift.

We also covered the types of each drift with examples, how to detect drift, how to monitor a model's performance, and finally how to overcome drift. Yet, despite this lengthy list of suggestions and strategies, the essential thing is to know your data. Every use case, model, and organization is unique, and sometimes issues cannot be fixed simply by applying strategy X or Y. Ultimately, we should try to anticipate what might happen and build safeguards to head off future mishaps.